Multiple agents. One truth. We don't trust any single AI model. We make several models check each other's work, and we surface every discrepancy to your supervisors.

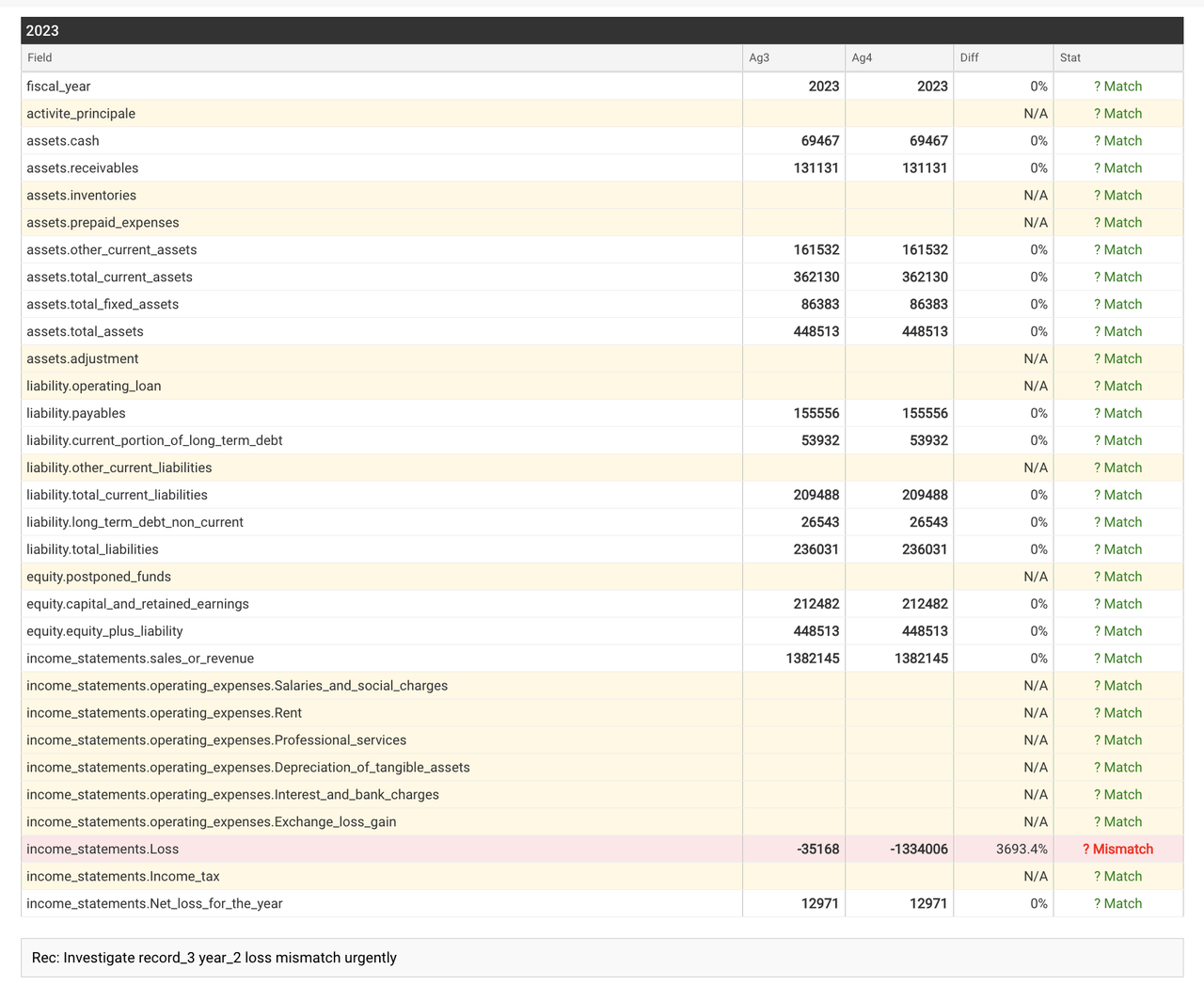

Every data point runs through multiple independent AI agents. When they agree, you get a validated extraction. When they diverge, even on a single field, the system stops and escalates.

Other vendors hide uncertainty behind confidence scores.

We surface it — so your supervisors can act.

Legal liability lives in the edge cases. When our consensus engine detects ambiguity — a blurred scan or conflicting data — it doesn't guess. It routes the specific data point to your analyst's Exception Queue.
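For technical readers, the routing rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the product's actual implementation: the names `check_consensus`, `ConsensusResult`, and `exception_queue`, the agent labels, and the sample field values are all hypothetical, and agreement is modeled as simple exact-match equality across agents.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ConsensusResult:
    # Fields on which every agent returned the same non-empty value.
    validated: Dict[str, str] = field(default_factory=dict)
    # Fields routed to a human reviewer, with each agent's candidate value.
    exception_queue: List[dict] = field(default_factory=list)


def check_consensus(extractions: Dict[str, Dict[str, Optional[str]]]) -> ConsensusResult:
    """Compare per-field outputs from several agents (hypothetical sketch).

    `extractions` maps an agent name to that agent's {field name: value}.
    A field is validated only if all agents agree exactly; a missing or
    differing value sends that single field to the exception queue.
    """
    result = ConsensusResult()
    all_fields = sorted({name for out in extractions.values() for name in out})
    for name in all_fields:
        candidates = {agent: out.get(name) for agent, out in extractions.items()}
        distinct = set(candidates.values())
        if len(distinct) == 1 and None not in distinct:
            result.validated[name] = next(iter(distinct))
        else:
            # No guessing: keep every candidate so the analyst sees the conflict.
            result.exception_queue.append({"field": name, "candidates": candidates})
    return result


# Illustrative input: two agents agree on one field and disagree on another.
result = check_consensus({
    "agent_a": {"invoice_total": "1,204.50", "due_date": "2024-03-01"},
    "agent_b": {"invoice_total": "1,204.50", "due_date": "2024-03-07"},
})
print(result.validated)
print([item["field"] for item in result.exception_queue])
```

In this sketch only the disputed field is escalated; the agreed field passes through as validated. A production system would likely normalize values before comparing them and attach the source document region to each queued item, but those details are outside what this page describes.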